“Empowering Imagination.”

This is the slogan of Roblox, one of the world’s biggest gaming platforms. It has over 100 million daily active users, all of whom can create almost anything they want, which is where the slogan comes from. Many children know the platform and have been playing for years. Adults love it too and play alongside others. Some users, like me, play to make online friends and escape reality for a little while. Adults can also bond with anyone they meet there.

And that’s the problem.

We all know there are some very disgusting adults out there who try to talk to children in horrifying ways. This has created a huge safety concern on the platform, leaving parents worried about whether their children are really safe. This year, Roblox faced massive criticism after banning, and threatening to sue, a content creator who goes by the name Schlep and who worked to protect the community from bad actors. Now the company faces multiple lawsuits from several states and families, which has pushed it to introduce a new mandatory feature.

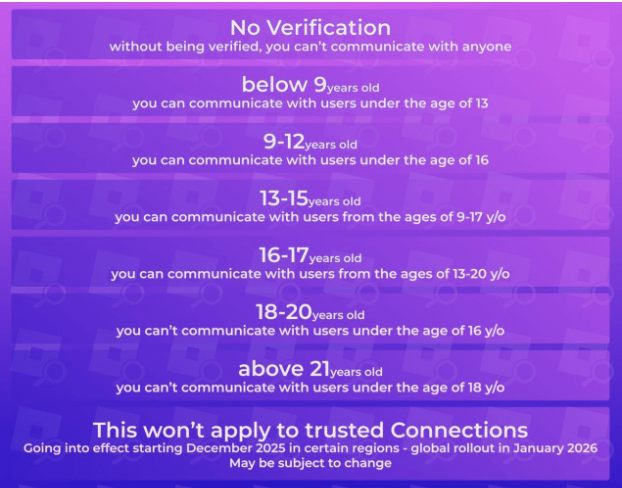

The new feature uses AI to estimate a user’s age. The user takes a picture of their face, and Roblox then places them into what it calls “Age Groups”. For example, if you’re 18 and complete the face check, Roblox will place you in the 18-20 age group, which means you cannot chat with anyone under 16. Jules Polonetsky, CEO of the Future of Privacy Forum, says that “Roblox deploying this privacy preserving implementation of thoughtful age assurance for its uniquely mixed audience of youth and adults will strengthen protections for younger players while respecting user rights.” Sounds really safe, right?

Well…no.

The Problems and Dangers

There are many, many problems with this update. The primary issue is that an AI is used to judge people’s ages from their faces. This has already led to huge backlash within the community, with many players questioning how accurate the AI really is. Others have raised privacy concerns, but Roblox has reassured users that the photos they take are deleted immediately after the verification check.

Now for my own experience with this verification. On 3 December I did the age check to test how trustworthy it really is. At first, it estimated me as 13+, which is correct, as I’m 14. However, only a few hours later, it detected me as 18 and moved me into the 18-20 category. That may sound amazing at first, since you get to swear and have more freedom. But the more you think about it, the worse it really is.

I have around 40 online friends that I talk to every day. They’re all around 13-15, so being put into the 18-20 group is a nightmare for me. I cannot communicate with them unless I call them on a different site, such as Discord. But according to Roblox’s Terms of Service, you cannot direct users off the platform, which is what Schlep allegedly did. Roblox calls this ‘unsafe’, but many users do it anyway. And as a friendly reminder, Discord also faces huge backlash over its own child safety measures. Many other users have also been placed into the wrong category. One of my online friends is 19 and was detected as 28, showing how inaccurate this AI really is.

Another huge problem is that Roblox has many roleplay and strategy games. Both genres require a lot of communication between players, and with these age groups, some players may be unable to talk to each other. These games will likely suffer the most from this update and could lose a lot of income.

The other problem, and the most concerning, is how pedophiles could abuse this. They are very likely to target specific age groups, for example by finding a stock photo of a child online and using it to force their way into that category. There are many videos on social media showing people using a stock image of an adult to get the AI to place them into an older age group. Pedophiles could easily do the opposite, which is very dangerous. A Reddit user pointed out that older players can’t report this behaviour if they cannot see these bad actors interacting with children behind the scenes. Pedophiles can also buy Roblox accounts that are already placed in the age groups they want to target, which is very, very concerning.

Roblox also seems to be treating the whole 18+ community as a danger to younger players. I have a friend who’s 18, and she’s completely against this update because she isn’t a creep like some people. Not every adult player is here to do illegal things. It’s also worth pointing out that teenagers can sexually harass younger players too, which becomes even more dangerous when older, more mature players cannot see what they’re typing to the younger ones.

What now?

Even if this update sounds terrible, it does show that Roblox is trying to protect the community. Still, I personally believe this feature needs significant improvement. The online world will always have adults who are there to cause harm, and these companies need to protect their younger users. Roblox has clearly thought about this after the criticism it faced over the Schlep ban, but it still has not done enough. For example, Roblox seems too lazy to hire the extra moderators it needs to protect the platform. Roblox has over 100 million daily active users and only about 8,000 moderators. In contrast, TikTok has around 80 million daily active users and nearly 40,000 moderators. That is five times as many moderators as Roblox, even though Roblox has more daily users: roughly one moderator for every 12,500 Roblox users, compared with about one for every 2,000 TikTok users. This shows how unprepared Roblox is to deal with safety on its platform.

Roblox has also stopped listening to its own community, and users now call the company too ‘corporate’. This is clear from its update to friends, where it renamed them ‘connections’, which I personally think is an absolutely stupid idea.

I have never heard anyone call a friend a ‘connection’. So when we unfriend someone, Roblox wants us to call it ‘disconnecting’?

Roblox can still fix everything just by listening to the community. It should also hire more moderators to protect users from bad actors. From what I can see, Roblox is prioritising money over its community and safety, which is completely wrong. If Roblox had thought about the users who help it earn money, this AI face check would never have needed to be implemented.

What users can do now

For now, Roblox doesn’t seem willing to remove this feature at all. As of December 2025, the AI check is being recommended to everyone, and the update is expected to fully roll out in January 2026. Here’s how users can do the check:

- Open Roblox

- Head to Settings and click ‘Account Info’

- In the Personal section, there should be a button prompting you to do the check

- Complete the check

- Once you’ve completed it, Roblox should show your age group (which, as mentioned earlier, may be wrong)

- Users who want a correct age group can do the government ID check instead

The future of Roblox

This isn’t the only issue Roblox faces. It also faces many lawsuits from both the public and the government. However, this doesn’t mean the platform will be shut down: the game still thrives and remains one of the most popular games in the world. The company just needs to find a way to connect with its community again, and things may get better from here.

By Kingsley
